
Mastering O1: The Ultimate Guide to Next-Gen AI Prompt Engineering

Introduction to the O1 model

What is the O1 model?

The O1 model is a specialized large language model designed to excel in coding-related tasks—particularly those found in modern web development. Unlike general-purpose models, the O1 model boasts:

- Contextual Finesse: It can retain and reapply context efficiently across multiple interactions, making multi-step problem-solving more streamlined.

- Focused Training Data: Emphasizes code syntax, coding patterns, and best practices in popular web languages such as TypeScript, JavaScript, and PHP.

- Efficient Memory Handling: Designed for minimal “hallucination” when dealing with complex or lengthy code snippets, reducing repeated clarifications.

Why this guide?

This guide aims to teach you how to craft prompts that bring out the O1 model’s strengths. With skilled prompting, you can streamline tasks like:

- Code generation and refactoring

- Bug detection and debugging guidance

- Architectural recommendations and best practices

- Performance optimization suggestions

Whether you’re building RESTful APIs, microservices, or multi-tenant SaaS applications, leveraging the O1 model’s unique abilities can significantly reduce the back-and-forth typically involved in writing or reviewing code.

Why prompt engineering matters for experienced developers

Beyond basic queries

Prompt engineering isn’t just for beginners looking to generate boilerplate code. In fact, experienced developers stand to gain the most by applying sophisticated prompting strategies:

- Precision answers:
  - Skilled devs can ask highly targeted questions that yield solutions closely aligned with their architectural goals.
  - Example: “Propose a data access layer pattern that integrates with a distributed cache in TypeScript.”
- Time savings:
  - Reduces the time you spend writing repetitive code or cross-referencing documentation for the nth time.
  - Prompt engineering can quickly generate robust scaffolds for advanced functionalities.
- Quality assurance:
  - Well-crafted prompts encourage the model to produce code that adheres to recognized best practices and coding standards.
  - This can lead to fewer security oversights and fewer logic errors.

The evolving role of LLMs in web development

With the speed at which technology changes, LLMs like O1 become an essential partner in staying updated with the latest frameworks, libraries, and best practices. Skilled prompting ensures that the responses are not only accurate but also relevant to modern development workflows (e.g., micro-frontends, serverless architectures, or cross-platform solutions).

Core strengths of the O1 model

1. Context retention

The O1 model can recall large swaths of previous conversation or code, making iterative refinement highly efficient. For instance:

If you feed O1 a TypeScript interface in one prompt, you can reference that same interface by name in subsequent prompts without re-introducing it.

2. Fine-grained code understanding

Rather than just generating boilerplate, O1 is adept at code comprehension—analyzing logic, spotting potential pitfalls, and suggesting improvements. For example:

- Data Validation: Checking if an interface properly reflects optional vs. required properties.

- Performance: Suggesting asynchronous patterns in TypeScript to avoid blocking operations.
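To make the "optional vs. required" point concrete, here is the kind of code you might ask O1 to review. It is a minimal sketch assuming a hypothetical `UserInput` shape: the interface marks `phone` as optional, and a runtime type guard enforces the same contract on untrusted JSON, since TypeScript types are erased at compile time.

```typescript
// Hypothetical shape used only for illustration.
interface UserInput {
  id: string;     // required
  email: string;  // required
  phone?: string; // optional: may be absent, but must be a string if present
}

// Runtime check that mirrors the interface above.
function isUserInput(value: unknown): value is UserInput {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.id === "string" &&
    typeof v.email === "string" &&
    (v.phone === undefined || typeof v.phone === "string")
  );
}

console.log(isUserInput({ id: "u1", email: "a@example.com" }));            // true
console.log(isUserInput({ id: "u1", email: "a@example.com", phone: 42 })); // false
```

A mismatch between the interface and a guard like this (for example, a guard that rejects objects without `phone`) is exactly the kind of inconsistency a targeted review prompt can surface.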

3. Error diagnosis and debugging

By providing a detailed error message and the relevant code snippet, you can quickly get pinpointed suggestions for fixes. For instance:

“Here’s a TypeScript function that’s throwing a TypeError: Cannot read property 'id' of undefined. Why is this happening and how do I fix it?”
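As an illustration of the kind of answer to expect, the sketch below reproduces one common cause of that error (looking up a record that does not exist, then reading `.id` from the `undefined` result) and shows two typical fixes. The `User` type and the `findUser` and `requireUserId` helpers are hypothetical.

```typescript
interface User {
  id: number;
  name: string;
}

const users: User[] = [{ id: 1, name: "Ada" }];

// Array.prototype.find returns undefined when nothing matches.
function findUser(name: string): User | undefined {
  return users.find((u) => u.name === name);
}

// Buggy version (rejected by the compiler under strictNullChecks, crashes at runtime without it):
// const id = findUser("Grace").id; // TypeError: Cannot read properties of undefined (reading 'id')

// Fix 1: optional chaining yields undefined instead of throwing.
const maybeId: number | undefined = findUser("Grace")?.id;

// Fix 2: an explicit guard that fails with a descriptive error.
function requireUserId(name: string): number {
  const user = findUser(name);
  if (user === undefined) {
    throw new Error(`No user named "${name}"`);
  }
  return user.id;
}

console.log(maybeId);              // undefined
console.log(requireUserId("Ada")); // 1
```

Which fix is right depends on whether a missing record is expected (optional chaining) or a bug (fail loudly), which is worth stating in the prompt.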

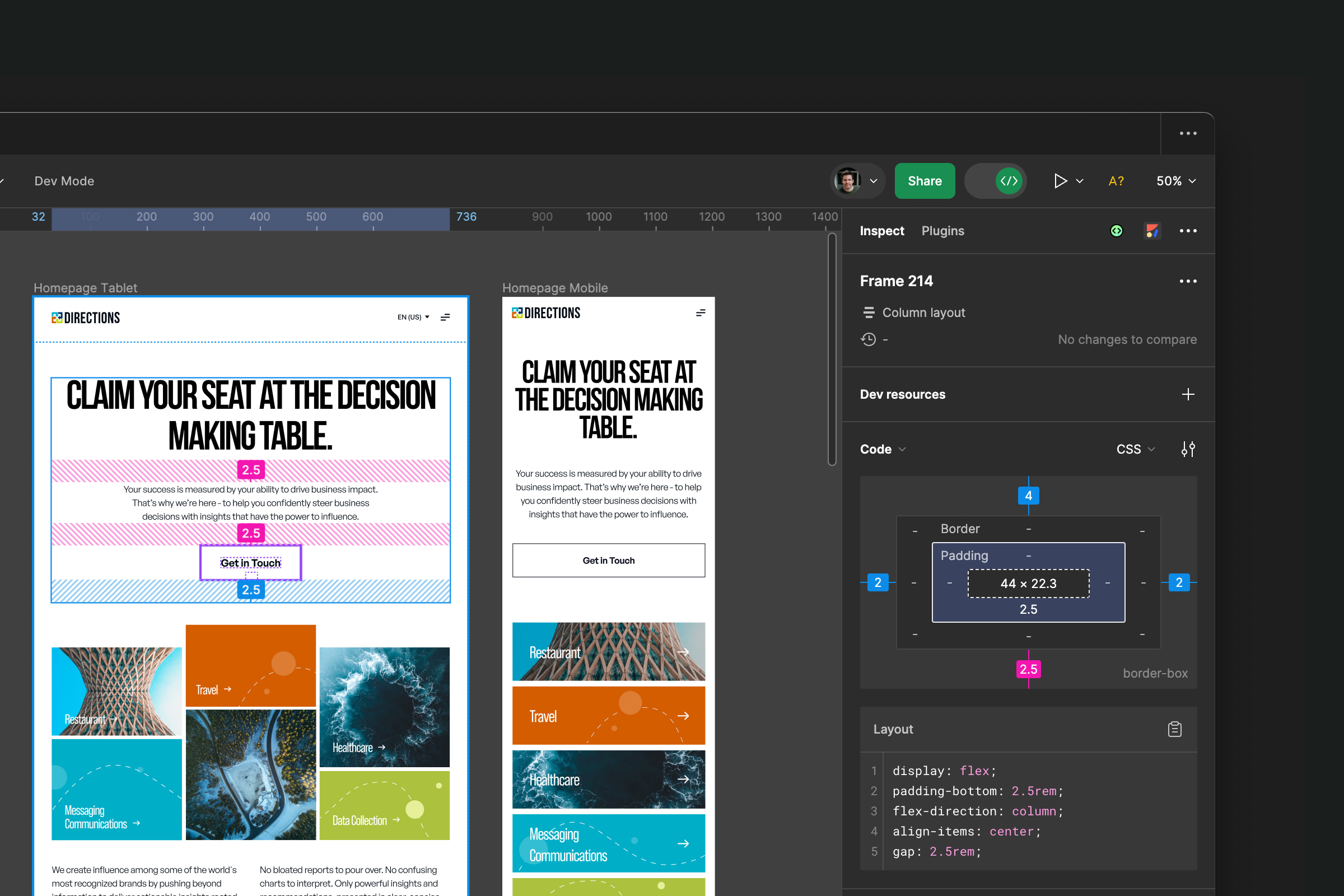

Anatomy of a highly effective prompt

Key components of a prompt

- Contextual setup
  - Provide enough background for the model to place your request in the correct domain.
  - Include relevant architectural details, version information, or dependencies.
- Goal articulation
  - Describe exactly what you want to achieve (e.g., “Implement a caching layer for a REST API,” or “Refactor this code to be more modular”).
- Constraints and requirements
  - Outline project-specific constraints: coding conventions, security standards, version constraints, etc.
- Desired output format
  - Indicate if you want raw code, an explanatory walkthrough, or an implementation plan. The O1 model can adapt its response to your preferred format.
- Examples or edge cases
  - If you expect to handle special conditions or unusual input, specify them.
  - This step helps ensure the O1 model’s proposed solution covers real-world complexities.
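If you send prompts programmatically, the five components can be assembled the same way every time. The helper below is a minimal sketch; the `PromptSpec` fields, section labels, and sample values are our own illustrative naming, not part of any O1 or OpenAI API.

```typescript
// Hypothetical structure mirroring the five prompt components above.
interface PromptSpec {
  context: string;
  goal: string;
  constraints: string[];
  outputFormat: string;
  edgeCases?: string[];
}

// Joins the components into one plain-text prompt, one labeled section each.
function buildPrompt(spec: PromptSpec): string {
  const sections = [
    `Context:\n${spec.context}`,
    `Goal:\n${spec.goal}`,
    `Constraints:\n${spec.constraints.map((c) => `- ${c}`).join("\n")}`,
    `Output format:\n${spec.outputFormat}`,
  ];
  // Edge cases are optional, so the section is only added when present.
  if (spec.edgeCases && spec.edgeCases.length > 0) {
    sections.push(`Edge cases:\n${spec.edgeCases.map((e) => `- ${e}`).join("\n")}`);
  }
  return sections.join("\n\n");
}

const prompt = buildPrompt({
  context: "TypeScript (ES2020) REST API on Node.js 18",
  goal: "Implement a caching layer for the GET /products endpoint",
  constraints: ["No new dependencies", "Cache entries expire after 60 seconds"],
  outputFormat: "Complete TypeScript code with inline documentation",
  edgeCases: ["Concurrent requests for the same uncached key"],
});
console.log(prompt);
```

Keeping the sections in a fixed order makes it easy to spot a missing component before the prompt is sent.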

Sample “effective” prompt

I have a web application using TypeScript (ES2020) and Node.js 16.

The app performs image uploads and transformations.

I need a function to validate incoming image metadata and convert the file to a standardized format.

Constraints:

- Must handle images up to 10MB

- Use built-in Node.js buffers (no external dependencies)

- Return a Promise that resolves with either a success object or an error message

- Follow functional programming principles

Please provide a complete TypeScript function with inline documentation.
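For reference, the sketch below shows roughly what the validation half of that request could look like using only Node.js `Buffer`. It is an illustrative sketch under stated assumptions, not O1 output: it enforces the 10MB limit, identifies PNG and JPEG files by their magic bytes, and returns a Promise of a discriminated union. Format conversion is deliberately left out, since re-encoding images without external libraries is a substantial task of its own. The names `validateImage` and `detectFormat` are hypothetical.

```typescript
const MAX_BYTES = 10 * 1024 * 1024; // 10MB, per the prompt's constraint

type ImageFormat = "png" | "jpeg";

type ValidationResult =
  | { ok: true; format: ImageFormat; bytes: number }
  | { ok: false; error: string };

const PNG_SIGNATURE = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);
const JPEG_SIGNATURE = Buffer.from([0xff, 0xd8, 0xff]);

// Pure function: inspects the leading bytes and never mutates the buffer.
function detectFormat(data: Buffer): ImageFormat | undefined {
  if (data.subarray(0, PNG_SIGNATURE.length).equals(PNG_SIGNATURE)) return "png";
  if (data.subarray(0, JPEG_SIGNATURE.length).equals(JPEG_SIGNATURE)) return "jpeg";
  return undefined;
}

/**
 * Validates an uploaded image buffer.
 * Resolves with a success object or an error message; never rejects.
 */
async function validateImage(data: Buffer): Promise<ValidationResult> {
  if (data.length === 0) {
    return { ok: false, error: "Empty file" };
  }
  if (data.length > MAX_BYTES) {
    return { ok: false, error: `File exceeds ${MAX_BYTES} bytes` };
  }
  const format = detectFormat(data);
  return format === undefined
    ? { ok: false, error: "Unsupported image format" }
    : { ok: true, format, bytes: data.length };
}
```

Because every path resolves with a tagged result, callers can branch on `result.ok` and TypeScript narrows to the success or error shape accordingly.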

Core prompting techniques: clarity, context, and constraints

1. Clarity

- Be direct, not overly verbose:
  - A concise but precise request helps the model maintain focus.
  - Avoid “buried instructions” in a long paragraph; highlight them clearly.
- Indicate purpose:
  - If you plan to integrate the output into a microservice that handles thousands of requests per second, mention it.

2. Context

- Project background:
  - The more the model knows about your environment, the better.
  - For instance, specify whether you’re running Node.js in cluster mode or in a serverless environment (AWS Lambda, Azure Functions).
- Existing code snippets (when relevant):
  - Paste relevant interfaces, classes, or function signatures to anchor the model’s suggestions in your actual codebase.

3. Constraints

- Performance constraints:
  - Are you dealing with large datasets or concurrency requirements?
  - Do you have any SLAs regarding response time?
- Security requirements:
  - OAuth2, JWT, or custom authentication flows?
  - Data encryption or compliance standards (HIPAA, GDPR)?

By emphasizing these constraints within your prompt, you guide O1 to produce solutions that are not just theoretically correct but practically implementable.

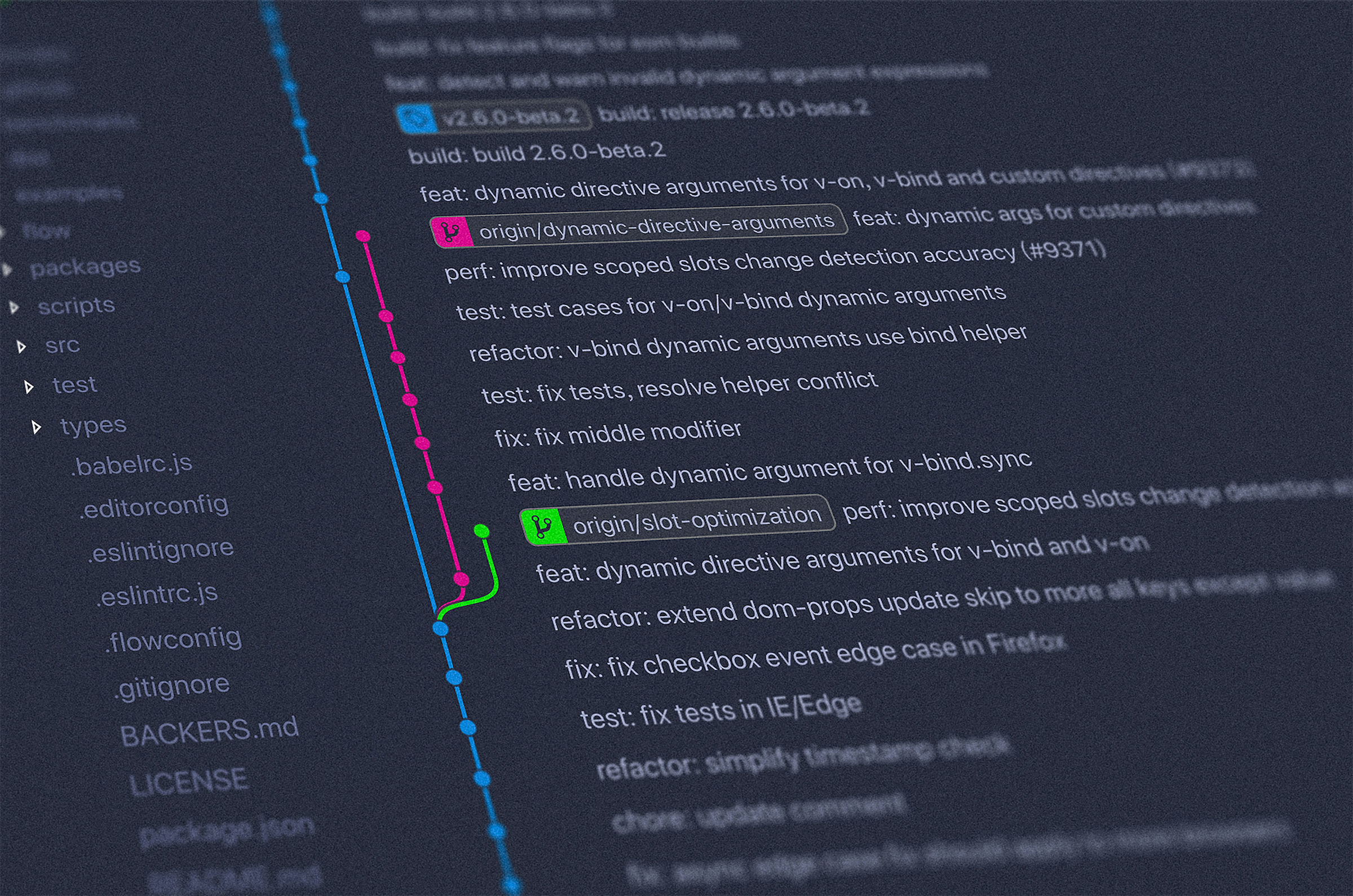

Iterative prompting and conversation flow

Why iteration matters

Even the most carefully crafted initial prompt might not yield a perfect solution. Iterative prompting leverages the O1 model’s memory to refine solutions over multiple exchanges.

- Initial request
  - Start with a broad outline or proof of concept.
  - Example: “Generate a TypeScript interface for a user profile with name, email, and an array of roles.”
- Refinement
  - Add or modify constraints based on the initial response.
  - Example: “Great. Now ensure the roles are enumerated types, and add a new optional dateOfBirth field.”
- Validation and testing
  - Provide O1 with the code’s test results or real-world feedback.
  - Example: “I’m getting a type conflict when roles are assigned. Here is the exact error message…”
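To show what the first two iterations might converge on, here is one plausible result, written by hand as an illustration rather than captured O1 output; the `Role` members are placeholders.

```typescript
// Iteration 1: name, email, and an array of roles.
// Iteration 2: roles become an enum, and dateOfBirth is added as optional.
enum Role {
  Admin = "admin",
  Editor = "editor",
  Viewer = "viewer",
}

interface UserProfile {
  name: string;
  email: string;
  roles: Role[];
  dateOfBirth?: Date; // optional, added in the refinement step
}

const profile: UserProfile = {
  name: "Ada Lovelace",
  email: "ada@example.com",
  roles: [Role.Editor, Role.Viewer],
};

// Plain strings are no longer accepted where a Role is expected, which is the
// kind of type conflict the validation step might surface:
// profile.roles.push("editor"); // compile error: "editor" is not assignable to Role
```

Pasting the exact compiler error from a line like the commented-out `push` gives O1 enough to suggest either mapping strings to `Role` values or widening the type.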

Best practices

- Keep iterations focused: Address one major change or question per iteration.

- Summarize changes: At the end of each iteration, confirm the new requirements.

Advanced tactics for complex web architectures

1. Microservices

When working with a microservice architecture, your prompts can guide O1 to:

- Generate shared interfaces:
  - Define type definitions shared by every service, so data contracts stay consistent.
  - Example prompt: “Create a shared interface for user data that both the AuthService and ProfileService can implement, ensuring unique user IDs across services.”
- Suggest communication protocols:
  - Ask O1 about best practices for gRPC vs. REST vs. GraphQL, depending on your performance or data querying needs.
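One way that shared contract could look is sketched below. The branded `UserId` type, the UUID-based ID generator, and the in-memory service classes are illustrative assumptions; in a real system the shared types would typically live in a package that both services import.

```typescript
import { randomUUID } from "node:crypto";

// A branded string: plain strings do not type-check as UserId without a cast.
type UserId = string & { readonly __brand: "UserId" };

function newUserId(): UserId {
  // Random (v4) UUIDs are effectively unique across services without coordination.
  return randomUUID() as UserId;
}

// Shared contract that every service handling user data implements.
interface UserRecord {
  id: UserId;
  email: string;
}

interface UserDataProvider {
  getUser(id: UserId): UserRecord | undefined;
}

// Hypothetical in-memory stand-ins for AuthService and ProfileService.
class AuthService implements UserDataProvider {
  private readonly users = new Map<UserId, UserRecord>();

  register(email: string): UserRecord {
    const user: UserRecord = { id: newUserId(), email };
    this.users.set(user.id, user);
    return user;
  }

  getUser(id: UserId): UserRecord | undefined {
    return this.users.get(id);
  }
}

class ProfileService implements UserDataProvider {
  private readonly profiles = new Map<UserId, UserRecord & { displayName: string }>();

  upsertProfile(user: UserRecord, displayName: string): void {
    this.profiles.set(user.id, { ...user, displayName });
  }

  getUser(id: UserId): UserRecord | undefined {
    return this.profiles.get(id);
  }
}
```

The brand is a compile-time device only; at runtime a `UserId` is an ordinary string, so it serializes over REST or gRPC without special handling.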

2. Serverless environments

If your web app relies on cloud functions (e.g., AWS Lambda, Google Cloud Functions), clarify these constraints. A typical advanced prompt might include:

We have a serverless function on AWS Lambda that handles file uploads.

It's written in TypeScript and uses S3 as a storage backend.

We need a method that generates pre-signed URLs valid for 1 hour.

Ensure minimal cold start overhead and a consistent logging strategy.

3. Multi-tenant SaaS applications

For subscription-based or multi-tenant SaaS solutions:

- Isolation:
  - Ask O1 to recommend patterns for per-tenant database schemas or row-level security.
- Scaling strategies:
  - Prompt for best practices to handle surging traffic from new tenants.

Performance and optimization considerations

Providing the right clues

The O1 model can propose advanced optimizations if it knows your performance goals. Include:

- Traffic expectations: e.g., “We expect 5,000 requests per second.”
- Memory constraints: e.g., “The container must stay under 512MB RAM usage.”
- Time complexity: if you want an O(n) solution for a data processing function, specify it.

Example prompt for performance

We're processing a large JSON dataset (approx. 200,000 records) in TypeScript.

We need a function that filters out invalid records, sorts by a specific field, and then maps data into a new structure.

Performance is critical; O(n log n) time overall (dominated by the sort) is acceptable.

Memory usage should stay under 512MB.

Please propose an optimized approach with a code snippet.

Potential O1 response highlights:

- Stream or chunk processing

- Lazy iteration with generators

- Minimizing intermediate data copies
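A minimal sketch of those ideas, assuming a hypothetical record shape and sort key: generators filter and map lazily, so invalid records are never copied. Sorting is the step that breaks laziness, since it needs every surviving record at once, so the sketch materializes the (smaller) valid set a single time, sorts it in place, and returns a lazy iterable for the mapping step.

```typescript
// Hypothetical input and output shapes for illustration.
interface RawRecord {
  id: unknown;
  score: unknown;
  name: unknown;
}

interface ValidRecord {
  id: number;
  score: number;
  name: string;
}

interface OutputRecord {
  key: string;
  score: number;
}

// Lazily yields only well-formed records; invalid ones are skipped without copying.
function* validRecords(records: Iterable<RawRecord>): Generator<ValidRecord> {
  for (const r of records) {
    if (typeof r.id === "number" && typeof r.score === "number" && typeof r.name === "string") {
      yield { id: r.id, score: r.score, name: r.name };
    }
  }
}

// Lazily maps records into the output shape.
function* toOutput(records: Iterable<ValidRecord>): Generator<OutputRecord> {
  for (const r of records) {
    yield { key: `${r.id}:${r.name}`, score: r.score };
  }
}

function processRecords(records: Iterable<RawRecord>): Iterable<OutputRecord> {
  // The sort needs all valid records in memory: one array, sorted in place, O(n log n).
  const valid = Array.from(validRecords(records));
  valid.sort((a, b) => b.score - a.score); // descending by score
  return toOutput(valid);
}
```

Returning an iterable rather than an array lets the caller stream results (for example, to a file or HTTP response) without holding a second full copy in memory.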

Security and compliance

1. Handling sensitive data

If your web app deals with personally identifiable information (PII) or financial data:

- Encryption:
  - Indicate if you need at-rest or in-transit encryption.
- Data sanitization:
  - O1 can propose robust sanitization libraries or patterns, especially if you mention the environment (Node.js, Deno, etc.).

2. Compliance frameworks

Mention relevant regulations (e.g., GDPR, CCPA, or HIPAA) so that O1 can tailor solutions. It may recommend:

- Automatic data anonymization for logs

- Strict data retention and purging policies
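As a rough illustration of automatic log anonymization, the sketch below pseudonymizes email addresses with a keyed HMAC before a log line is written, so entries for the same user can still be correlated without storing the address itself. The `LOG_HMAC_KEY` environment variable, the simplified email regex, and the `anonymize` and `logInfo` helpers are assumptions. Keep in mind that under GDPR, pseudonymized data still counts as personal data, so this reduces exposure rather than removing obligations.

```typescript
import { createHmac } from "node:crypto";

// Assumption: the key comes from configuration; never hard-code it in real code.
const HMAC_KEY = process.env.LOG_HMAC_KEY ?? "dev-only-key";

// Simplified email pattern; real-world addresses can be more varied.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;

// Replaces each email with a stable token that cannot be reversed without the key.
function anonymize(message: string): string {
  return message.replace(EMAIL_PATTERN, (email) => {
    const digest = createHmac("sha256", HMAC_KEY).update(email.toLowerCase()).digest("hex");
    return `user:${digest.slice(0, 12)}`;
  });
}

// Structured JSON log line with PII stripped from the message.
function logInfo(message: string): void {
  console.log(JSON.stringify({ level: "info", msg: anonymize(message), ts: new Date().toISOString() }));
}

logInfo("Password reset requested by jane.doe@example.com");
```

A keyed HMAC is used instead of a plain hash because unkeyed hashes of email addresses can be reversed by hashing a list of candidate addresses.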

Compliance-focused prompt

I have a TypeScript-based customer data platform that must comply with GDPR's 'Right to be Forgotten.'

Generate a code snippet that securely deletes user data from our Postgres database and logs a compliance record.

Highlight best practices for auditing these deletions.
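For orientation, here is a minimal sketch of the shape such an answer might take. It assumes a hypothetical schema (`users`, `user_events`, `deletion_audit`) and a small `Queryable` interface modeled on the `query(text, values)` method of node-postgres clients. The deletions and the audit record run in one transaction, and the audit row records that an erasure happened rather than the erased data.

```typescript
// Minimal interface modeled on node-postgres's client.query(text, values).
interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rowCount: number | null }>;
}

/**
 * Erases a user's rows and writes an audit record in a single transaction.
 * Table and column names are hypothetical.
 */
async function forgetUser(db: Queryable, userId: string, requestId: string): Promise<number> {
  await db.query("BEGIN");
  try {
    // Delete dependent rows first so foreign-key constraints are not violated.
    const events = await db.query("DELETE FROM user_events WHERE user_id = $1", [userId]);
    const users = await db.query("DELETE FROM users WHERE id = $1", [userId]);
    const deleted = (events.rowCount ?? 0) + (users.rowCount ?? 0);

    // The audit row stores the request reference and counts, not personal data.
    await db.query(
      "INSERT INTO deletion_audit (request_id, rows_deleted, deleted_at) VALUES ($1, $2, now())",
      [requestId, deleted],
    );
    await db.query("COMMIT");
    return deleted;
  } catch (err) {
    // Any failure undoes the partial deletion so data and audit log stay consistent.
    await db.query("ROLLBACK");
    throw err;
  }
}
```

With node-postgres, pass a client checked out from the pool rather than the pool itself, because every statement in a transaction must run on the same connection.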

Common pitfalls and how to avoid them

- Vague prompts
  - Pitfall: “Generate a data model for my app.”
  - Solution: Add clarifying details, such as field types, relationships, or usage contexts.
- Ignoring environment details
  - Pitfall: Not specifying Node.js vs. Deno vs. browser environment.
  - Solution: Provide environment information so O1 can suggest relevant APIs or polyfills.
- Overreliance on a single prompt
  - Pitfall: Expecting the model to handle a massive scope in one request.
  - Solution: Break large tasks into iterative steps.
- Security oversight
  - Pitfall: Forgetting to mention encryption or sanitization.
  - Solution: Explicitly ask for secure coding patterns, especially when dealing with user input.

Real-world prompting scenarios

A. Generating a WebSocket service in TypeScript

Scenario: You need a real-time chat service that uses WebSockets. You have decided on Node.js 18 with TypeScript.

Prompt example:

We're building a real-time chat feature using TypeScript on Node.js 18.

We need a WebSocket server that handles these events:

1. 'message' - broadcast to all connected clients

2. 'join' - add user to a specific chat room

3. 'leave' - remove user from the room

We also want to maintain a list of online users in a Redis cache.

Please provide a complete TypeScript class or module, including Redis integration best practices.

- O1 strength: Can suggest advanced patterns, such as broadcasting with channels and robust error handling, and clarify how to manage state in Redis.
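The core of such a service is room bookkeeping, which can be sketched without committing to a WebSocket library. Below, `Client` is a hypothetical minimal interface (a socket from the `ws` package, for example, exposes a compatible `send` method), broadcasting is scoped to one room, and an in-memory `Set` marks where the Redis-backed online-user list from the prompt would plug in.

```typescript
// Hypothetical minimal socket interface; adapt it to your WebSocket library.
interface Client {
  id: string;
  send(data: string): void;
}

class ChatRooms {
  private readonly rooms = new Map<string, Set<Client>>();
  // Stand-in for the Redis-backed online-user list the prompt asks for.
  readonly online = new Set<string>();

  join(room: string, client: Client): void {
    let members = this.rooms.get(room);
    if (!members) {
      members = new Set<Client>();
      this.rooms.set(room, members);
    }
    members.add(client);
    this.online.add(client.id);
  }

  leave(room: string, client: Client): void {
    const members = this.rooms.get(room);
    if (!members) return;
    members.delete(client);
    if (members.size === 0) this.rooms.delete(room); // avoid leaking empty rooms
  }

  // Removes the client from every room and marks the user offline.
  disconnect(client: Client): void {
    for (const room of [...this.rooms.keys()]) this.leave(room, client);
    this.online.delete(client.id);
  }

  // Sends to every member of one room; a failing socket does not stop the others.
  broadcast(room: string, message: string): void {
    for (const client of this.rooms.get(room) ?? []) {
      try {
        client.send(message);
      } catch (err) {
        console.error(`send to ${client.id} failed`, err);
      }
    }
  }
}
```

Keeping this logic independent of the transport makes it unit-testable with fake clients, and the `online` set can later be swapped for Redis calls without touching room handling.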

B. Middleware for logging and metrics

Scenario: You want to integrate a logging and metrics layer in a Node.js/Express application.

Prompt example:

Our Node.js/Express application is written in TypeScript and handles moderate traffic (about 2,000 requests/second).

We want to:

- Log each request (method, endpoint, status code)

- Measure and record response times

- Potentially integrate with Grafana for metrics

Please provide a middleware implementation that demonstrates best practices for this scenario.

- O1 strength: Can produce an idiomatic Express middleware, leveraging existing logging libraries (like winston or pino) and instrumentation frameworks (like prom-client).
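The timing logic such middleware needs is small enough to sketch without Express itself. The function below assumes only the request/response shape Express passes to middleware (`method`, `originalUrl`, `statusCode`, and the `'finish'` event that Node's `http.ServerResponse` emits), so Express's own types should satisfy it. The `Metric` record and the `record` callback are hypothetical stand-ins for a pino or winston logger and a prom-client histogram.

```typescript
import { EventEmitter } from "node:events";

// Minimal structural types covering what the middleware reads.
interface ReqLike {
  method: string;
  originalUrl: string;
}
interface ResLike extends EventEmitter {
  statusCode: number;
}

// Hypothetical metric record; in practice, feed a logger and a histogram.
interface Metric {
  method: string;
  path: string;
  status: number;
  durationMs: number;
}

function requestMetrics(record: (m: Metric) => void) {
  return (req: ReqLike, res: ResLike, next: () => void): void => {
    const start = process.hrtime.bigint(); // monotonic clock, unaffected by wall-clock changes
    // 'finish' fires once the full response has been handed off for sending.
    res.once("finish", () => {
      const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
      record({ method: req.method, path: req.originalUrl, status: res.statusCode, durationMs });
    });
    next();
  };
}
```

Two design notes: `originalUrl` can contain IDs, so a route pattern usually makes a better metrics label, because high-cardinality labels are expensive in Prometheus-style systems; and `'finish'` does not fire when a client disconnects early, so you may also want to listen for `'close'`.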

C. Worker and queue processing

Scenario: Large background tasks are queued for asynchronous processing using Bull or RabbitMQ.

Prompt example:

We have a TypeScript-based worker service that processes image transformations using BullMQ.

We're dealing with up to 10,000 jobs/hour.

Each job:

- Fetches an image from S3

- Applies transformations (resize, convert to grayscale)

- Saves the modified file back

Ensure best practices for concurrency (up to 5 parallel jobs) and handle job failure retries.

Please provide a robust worker implementation outline with code snippets.

- O1 strength: Ideal for generating an entire blueprint for concurrency, error handling, and job flow control.
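BullMQ handles concurrency and retries through its own options (a `concurrency` setting on the worker, `attempts` and `backoff` on jobs), so in practice you would configure those rather than hand-roll them. To make the mechanics visible, here is a library-free sketch of the same two ideas: at most N jobs in flight, and failed jobs retried with exponential backoff. `runJobs`, `withRetry`, and their parameters are hypothetical.

```typescript
// Retries a failing async task with exponential backoff: baseDelayMs, then 2x, 4x, ...
async function withRetry<T>(task: () => Promise<T>, attempts: number, baseDelayMs: number): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await task();
    } catch (err) {
      lastError = err;
      if (attempt < attempts - 1) {
        await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** attempt));
      }
    }
  }
  throw lastError;
}

// Runs jobs with at most `concurrency` in flight; resolves with per-job outcomes.
async function runJobs<J, R>(
  jobs: J[],
  handler: (job: J) => Promise<R>,
  concurrency: number,
  attempts = 3,
  baseDelayMs = 100,
): Promise<PromiseSettledResult<R>[]> {
  const results: PromiseSettledResult<R>[] = new Array(jobs.length);
  let next = 0;

  // Each lane claims the next unstarted job until none remain.
  // Claiming is safe without locks: there is no await between the check and the increment.
  async function lane(): Promise<void> {
    while (next < jobs.length) {
      const index = next++;
      try {
        const value = await withRetry(() => handler(jobs[index]), attempts, baseDelayMs);
        results[index] = { status: "fulfilled", value };
      } catch (reason) {
        results[index] = { status: "rejected", reason };
      }
    }
  }

  const lanes = Array.from({ length: Math.min(concurrency, jobs.length) }, () => lane());
  await Promise.all(lanes);
  return results;
}
```

For the scenario above, a call such as `runJobs(jobIds, transformImage, 5)` would cap work at five parallel jobs, where `transformImage` is a hypothetical handler that fetches, transforms, and saves one image.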

Additional resources

- OpenAI’s ChatGPT & GPT-4 Prompt Engineering Guide (OpenAI Cookbook GitHub): Shows real-world examples of prompting for code generation, debugging, and performance.
- “Prompt Engineering 101” by DAIR.AI (dair.ai blog): Discusses techniques like role prompts, chain-of-thought, and iterative prompting.
- “Advanced Techniques for Code Generation with LLMs” by Microsoft (Microsoft Developer Blog; search “LLM code generation”): Explores advanced patterns in leveraging LLMs to produce consistent, maintainable code.
- Prompt Engineering for Developers (DeepLearning.AI course, deeplearning.ai): Courses that teach how to systematically refine prompts for better results across various models.
