
From Orphan Pages to Silk-Road Links: Automating Internal Link Architecture

Key takeaways

• Orphan pages can waste up to 30% of your crawl budget

• AI-powered linking tools analyze context, not just keywords

• Python automation can process hundreds of pages simultaneously

• Semantic linking focuses on entities and topical relationships

What orphan pages are (and why they're killing your SEO)

Let's get one thing straight - orphan pages are the silent killers of SEO. They're sitting there on your website, consuming crawl budget, taking up server space, and providing absolutely zero value to your rankings. Orphan pages are content pieces that exist without any internal links pointing to them, making them invisible to both users and search engines navigating through your site structure.

But here's what most people don't realize - it's not just about completely isolated pages. You've also got near-orphaned pages that receive maybe one or two weak internal links from low-authority pages. These are almost as bad because they're getting minimal link equity and barely any visibility. I've seen sites where 20% of their content falls into this category, and they wonder why their rankings are stuck.
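To make "near-orphaned" concrete, here's a minimal sketch that counts inbound internal links per page from a crawl's edge list. The function name `inlink_report`, the edge-list format, and the threshold of two links are my own illustrative assumptions, and the sketch only counts links; it doesn't judge how authoritative the linking pages are.

```python
from collections import Counter

def inlink_report(pages, edges, weak_threshold=2):
    """Group pages by how many distinct internal links point to them.

    pages: every known URL (assumed to come from sitemap plus crawl).
    edges: (source_url, target_url) pairs for internal links.
    weak_threshold: hypothetical cutoff for "near-orphaned".
    """
    # Deduplicate edges and ignore self-links before counting.
    inbound = Counter(dst for src, dst in set(edges) if src != dst)
    report = {"orphan": [], "near_orphan": [], "linked": []}
    for page in sorted(pages):
        count = inbound.get(page, 0)
        if count == 0:
            report["orphan"].append(page)
        elif count <= weak_threshold:
            report["near_orphan"].append(page)
        else:
            report["linked"].append(page)
    return report

pages = ["/", "/a", "/b", "/c"]
edges = [("/", "/a"), ("/b", "/a"), ("/c", "/a"), ("/", "/b"), ("/a", "/")]
report = inlink_report(pages, edges)
```

In this toy graph `/c` has no inbound links at all, while `/` and `/b` each have only one, so they land in the near-orphan bucket.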

The real impact? Wasted crawl budget is just the beginning. When Google allocates crawl budget to your site, orphan pages eat up that allocation without providing any SEO value. Think about it - Googlebot is spending time crawling pages that can't rank because they have no internal link authority. Meanwhile, your important pages might be getting crawled less frequently. It's like paying for a gym membership and only using the water fountain.

What types of pages commonly become orphaned? Here's what I see most often:

- Old blog posts that got removed from category pages

- Product pages from discontinued items

- Landing pages from expired campaigns

- Test pages that developers forgot to delete

- Paginated content beyond page 3 or 4

The worst part is that orphan pages can actually hurt your overall site quality signals. Google sees these pages through other discovery methods (like sitemaps or external links), realizes they're not connected to your site structure, and questions your site's organization. If you're serious about technical SEO, orphan page cleanup should be priority number one.

Finding orphan pages - the detective work nobody talks about

So how do you actually find these hidden pages? Most people think running a simple crawl is enough. Wrong. The most effective approach is what I call dual-analysis - combining site crawlers with log file data to catch everything. This method reveals both pages that crawlers can't find AND pages that Google discovers through other means.

Here's the thing - traditional crawling only finds pages that are linked from your site structure. But what about pages that Google discovers through your XML sitemap, external backlinks, or even direct URL access? That's where log file analysis comes in. You need to compare what Googlebot is actually crawling versus what your internal crawl can find. The difference? Those are your orphan pages.
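Here's a rough sketch of the log-file side of that comparison. It assumes your server writes the common "combined" access log format and that your crawler exports a set of URL paths; `googlebot_paths` and `orphan_candidates` are hypothetical helper names. Matching on the user-agent string alone can be spoofed, so treat the output as candidates rather than proof.

```python
import re

# Pulls the request path and user agent out of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_paths(log_lines):
    """Return the set of paths requested by a user agent claiming to be Googlebot."""
    paths = set()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            # Drop query strings so /page?ref=x and /page count as one URL.
            paths.add(match.group("path").split("?")[0])
    return paths

def orphan_candidates(log_lines, crawled_paths):
    """Paths Googlebot requested that the internal crawl never reached."""
    return googlebot_paths(log_lines) - set(crawled_paths)
```

You would feed it something like `orphan_candidates(open("access.log"), crawl_paths)`, where both the file name and `crawl_paths` are placeholders for your own data.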

The best detection method I've found uses Screaming Frog's advanced integration features. You can pull in data from:

- Google Analytics (to find pages receiving traffic)

- Search Console (to find indexed pages)

- XML sitemaps (to find submitted pages)

- Server logs (to find crawled pages)

Then you cross-reference all of this against your crawl data. Any page that appears in those sources but not in your crawl? Bingo - orphan page.
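The cross-referencing step itself is just set arithmetic once the URLs are normalized. This sketch assumes you've exported each source to a plain list of URLs; the source labels, the `normalize` rules, and the function names are illustrative choices of mine, not anything Screaming Frog produces.

```python
from urllib.parse import urlsplit

def normalize(url):
    """Lowercase scheme and host, drop query/fragment and trailing slash."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}"

def cross_reference(crawl_urls, sources):
    """Map each URL missing from the crawl to the sources that know about it.

    sources: dict like {"sitemap": [...], "search_console": [...]}.
    """
    crawled = {normalize(u) for u in crawl_urls}
    found = {}
    for name, urls in sources.items():
        for url in urls:
            key = normalize(url)
            if key not in crawled:
                found.setdefault(key, set()).add(name)
    return found

crawl = ["https://Example.com/", "https://example.com/blog/"]
sources = {
    "sitemap": ["https://example.com/blog", "https://example.com/old-campaign/"],
    "search_console": ["https://example.com/old-campaign", "https://example.com/test-page"],
}
orphans = cross_reference(crawl, sources)
```

Keeping the source names attached to each URL tells you where to look next: a page that only shows up in server logs is a different problem from one you're actively submitting in your sitemap.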

Want to know something crazy? Some tools can identify orphan pages automatically by analyzing multiple data sources simultaneously. Tools like JetOctopus and Botify use machine learning to detect patterns in orphan page creation. They'll even alert you when new orphan pages appear, which is huge for large sites that publish content frequently.

The manual approach still works for smaller sites though. Export your sitemap URLs, run a crawl, and use Excel to find URLs that appear in the sitemap but not in the crawl. It's tedious, but it works. Just make sure you're also checking Google Search Console for indexed pages that might not be in your sitemap. Trust me, you'll find surprises. Need help setting this up? Screaming Frog is still my go-to tool for this kind of analysis.
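If Excel isn't your thing, the same sitemap-versus-crawl comparison fits in a few lines of standard-library Python. This is a sketch that assumes a single standard `urlset` sitemap (not a sitemap index) and a crawl export you've already loaded as a list of URLs; the function names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text}

def sitemap_only(xml_text, crawled_urls):
    """URLs listed in the sitemap that the crawl did not find."""
    return sorted(sitemap_urls(xml_text) - set(crawled_urls))
```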

AI-powered internal linking - the game changer

Remember when internal linking meant manually searching for keyword matches and adding links one by one? Those days are gone. Modern AI-powered tools use natural language processing to understand content context and create intelligent linking suggestions. We're talking about systems that actually understand what your content is about, not just matching keywords.

The real magic happens with tools like Link Whisper and LinkBoss. These platforms analyze your content in real-time as you write, suggesting contextually relevant links based on semantic understanding. But here's the kicker - they don't just match keywords. They understand entities, topics, and relationships between different pieces of content.

How does this actually work? The AI reads your content and identifies:

- Main topics and subtopics

- Entities mentioned (people, places, products)

- User intent and content purpose

- Semantic relationships with existing content

- Optimal anchor text variations

I recently worked with a professional services firm that implemented LinkBoss across their 10,000+ page site. The AI identified over 50,000 internal linking opportunities they'd missed. But more importantly, it prioritized them based on potential SEO impact. Within 3 months, their organic traffic increased by 35%.

What makes these tools special is the learning component. They analyze which internal links actually drive engagement and conversions, then adjust their suggestions accordingly. It's like having an SEO expert who never sleeps, constantly optimizing your internal link structure based on real performance data.

The best part? Integration is stupid simple. Most tools work with any CMS through basic JavaScript implementation. They can even work with static sites through API integration. If you're still doing internal linking manually, you're basically competing with one hand tied behind your back. Magnet's AI expertise can help you implement these systems properly.

Semantic entity-based linking that Google loves

Okay, this is where things get really interesting. Google doesn't think in keywords anymore - it thinks in entities and relationships. Semantic internal linking means connecting content based on topical relationships and entity connections, not just keyword matches. This is the difference between ranking well and dominating your niche.

So what's an entity in SEO terms? It's any distinct thing that can be identified - your business, your authors, your products, your locations. Google's Knowledge Graph is built on understanding these entities and how they relate to each other. When your internal linking reflects these relationships, you're speaking Google's language.

Here's how semantic linking differs from traditional keyword matching:

- Traditional: Link "SEO tips" to other pages with "SEO tips"

- Semantic: Link "SEO tips" to related concepts like "keyword research," "content optimization," and "link building"
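One simple way to approximate the semantic side is to compare the sets of entities each page mentions and link pages whose sets overlap. The sketch below uses Jaccard similarity; the entity sets are hand-written examples (in practice they'd come from whatever entity extraction you use), and the `min_score` cutoff is an arbitrary assumption.

```python
def jaccard(a, b):
    """Share of entities two pages have in common (0.0 to 1.0)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

def related_pages(page, entities_by_page, min_score=0.2):
    """Rank other pages by entity overlap with the given page."""
    base = entities_by_page[page]
    scored = [
        (other, round(jaccard(base, ents), 2))
        for other, ents in entities_by_page.items()
        if other != page
    ]
    keep = [row for row in scored if row[1] >= min_score]
    return sorted(keep, key=lambda row: (-row[1], row[0]))

entities_by_page = {
    "/seo-tips": {"seo", "keyword research", "link building", "content optimization"},
    "/keyword-research-guide": {"keyword research", "seo", "search volume"},
    "/link-building-101": {"link building", "seo", "backlinks", "outreach"},
    "/office-party": {"team", "events"},
}
suggestions = related_pages("/seo-tips", entities_by_page)
```

Notice that neither suggested page needs to contain the phrase "SEO tips"; they qualify because they share concepts with it, which is the whole point.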

The implementation is more sophisticated too. Instead of generic anchor text, you use descriptive phrases that provide context. For example:

- Bad: "Click here for SEO tools"

- Good: "Discover advanced SEO tools for keyword tracking and SERP analysis"
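You can catch the "bad" pattern automatically with a tiny check like this one. The lists of generic phrases and the three-word minimum are my own assumptions, so tune them to your site's voice before trusting the output.

```python
# Hypothetical lists of anchors that carry no context on their own.
GENERIC_EXACT = {"here", "link", "this page", "more"}
GENERIC_PREFIXES = ("click here", "read more")

def anchor_issues(anchor, min_words=3):
    """Return a list of problems found in a single anchor text."""
    text = " ".join(anchor.lower().split())
    issues = []
    if text in GENERIC_EXACT or text.startswith(GENERIC_PREFIXES):
        issues.append("generic")
    if len(text.split()) < min_words:
        issues.append("too_short")
    return issues

bad = anchor_issues("Click here for SEO tools")
good = anchor_issues("Discover advanced SEO tools for keyword tracking and SERP analysis")
```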

I've seen this work miracles for topical authority. One ecommerce client reorganized their internal linking around product entities and categories rather than keywords. They created semantic connections between products, guides, and category pages. Result? Their category pages started ranking for competitive head terms within 6 months.

The key is thinking in topic clusters. Your pillar content should link to all supporting content, and supporting content should link back to pillars AND to related supporting pages. This creates a web of topically relevant connections that Google interprets as expertise. Want to understand more about how search engines interpret these connections? Check out our semantic search guide.
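The pillar-and-supporting rule is easy to verify once you have a list of internal links. Here's a minimal sketch, assuming links are stored as (source, target) pairs; `cluster_gaps` is a hypothetical name, and it only checks the two mandatory directions, not the optional links between supporting pages.

```python
def cluster_gaps(pillar, supporting, edges):
    """List the pillar <-> supporting links that are missing from a cluster."""
    links = set(edges)
    missing = []
    for page in sorted(supporting):
        if (pillar, page) not in links:
            missing.append((pillar, page))  # pillar should link down
        if (page, pillar) not in links:
            missing.append((page, pillar))  # supporting page should link back
    return missing

edges = [
    ("/seo-guide", "/keyword-research"),
    ("/keyword-research", "/seo-guide"),
    ("/seo-guide", "/link-building"),
]
gaps = cluster_gaps("/seo-guide", ["/keyword-research", "/link-building", "/technical-seo"], edges)
```

Each tuple in `gaps` reads as "this page should link to that page", so the output doubles as a to-do list.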

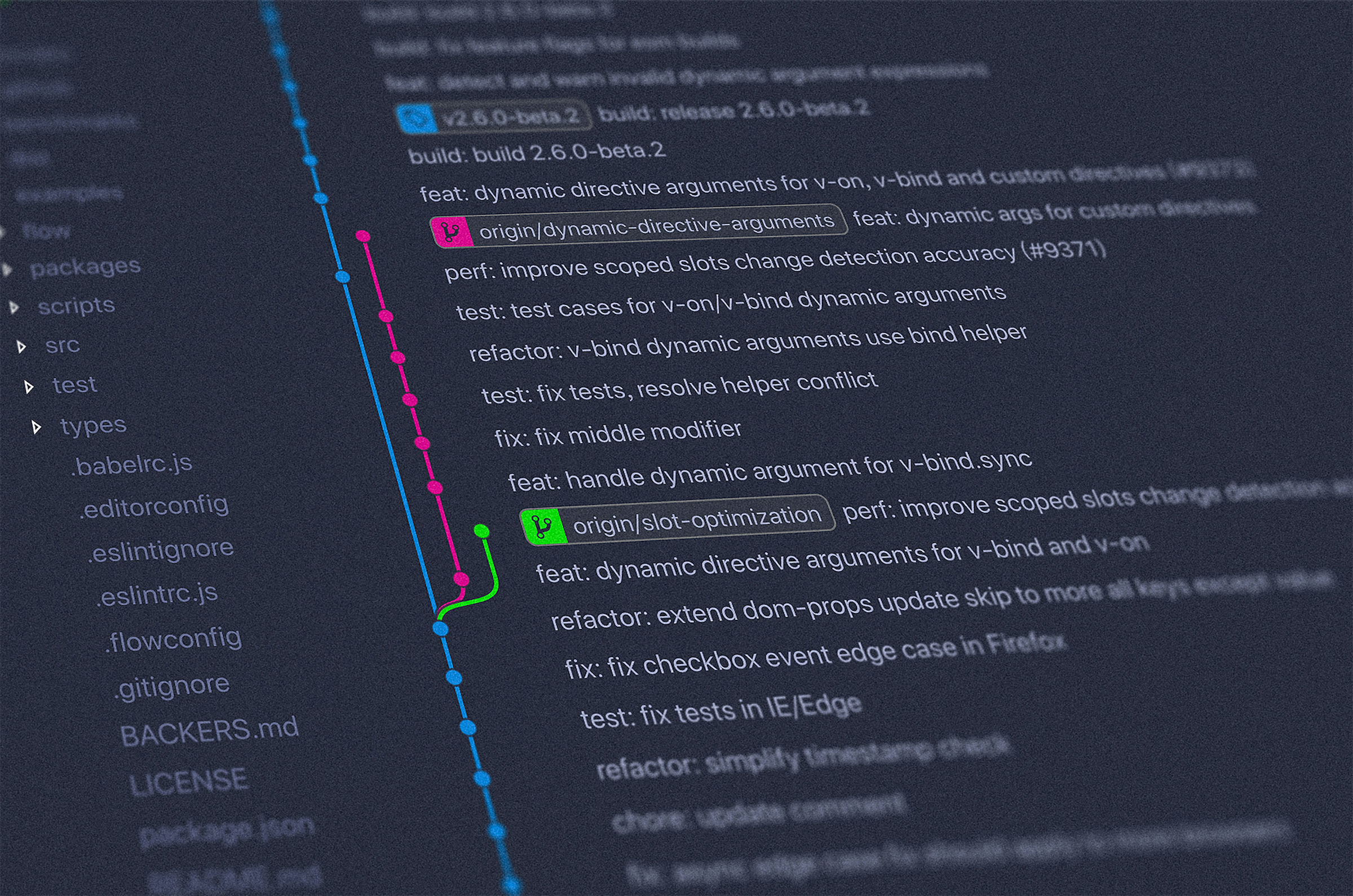

Python automation for internal linking at scale

Now let's talk about scaling this stuff up. Python automation can process hundreds of pages simultaneously, identifying linking opportunities that would take weeks to find manually. The beautiful thing? You can customize the scripts to match your specific needs and integrate with any platform.

The basic workflow looks like this:

- Create a spreadsheet with target pages and focus keywords

- Use Google's Custom Search API to find linking opportunities

- Exclude pages that already have the target links

- Generate anchor text suggestions using AI

- Export results for implementation

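Here's a simplified version of what the code structure looks like. The api_key and cx parameters are placeholders for your own Custom Search credentials, and the regex-based anchor helper is only a stand-in for a real NLP step:

import re
import requests

SEARCH_URL = "https://www.googleapis.com/customsearch/v1"

# Search for linking opportunities
def find_link_opportunities(target_url, keyword, domain, api_key, cx):
    # Use Google Custom Search API to find pages on the domain mentioning the keyword
    params = {"key": api_key, "cx": cx, "q": f'site:{domain} "{keyword}"'}
    response = requests.get(SEARCH_URL, params=params, timeout=10)
    response.raise_for_status()
    opportunities = []
    for item in response.json().get("items", []):
        url = item["link"]
        if url == target_url:
            continue
        # Exclude pages already linking to target (crude check: absolute URL in the HTML)
        if target_url not in requests.get(url, timeout=10).text:
            opportunities.append(url)
    # Return list of opportunities
    return opportunities

# Generate anchor text suggestions
def suggest_anchor_text(content, target_keyword, top_n=5):
    # Stand-in for NLP: keyword plus up to two words of context on each side
    pattern = re.compile(
        r"(?:\w+\s+){0,2}" + re.escape(target_keyword) + r"(?:\s+\w+){0,2}",
        re.IGNORECASE,
    )
    # Generate natural anchor variations, de-duplicated in order of appearance
    variations = dict.fromkeys(m.group(0) for m in pattern.finditer(content))
    # Return top suggestions
    return list(variations)[:top_n]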

But here's where it gets really powerful - you can integrate this with ChatGPT or Claude to generate contextually appropriate anchor text. The AI analyzes the surrounding content and suggests anchors that flow naturally. No more forced keyword stuffing.

I recently helped a SaaS company implement a Python-based system that:

- Scans new content for linking opportunities

- Checks existing internal link distribution

- Identifies under-linked high-value pages

- Generates weekly linking recommendations

- Tracks implementation and impact

The results? They went from adding 10-20 internal links per month manually to implementing 200+ contextually relevant links. Their average session duration increased by 40%, and orphan pages dropped to nearly zero.

Want to build something similar? You'll need solid API development skills and an understanding of SEO principles. Or just hire someone who knows what they're doing - the ROI is worth it.

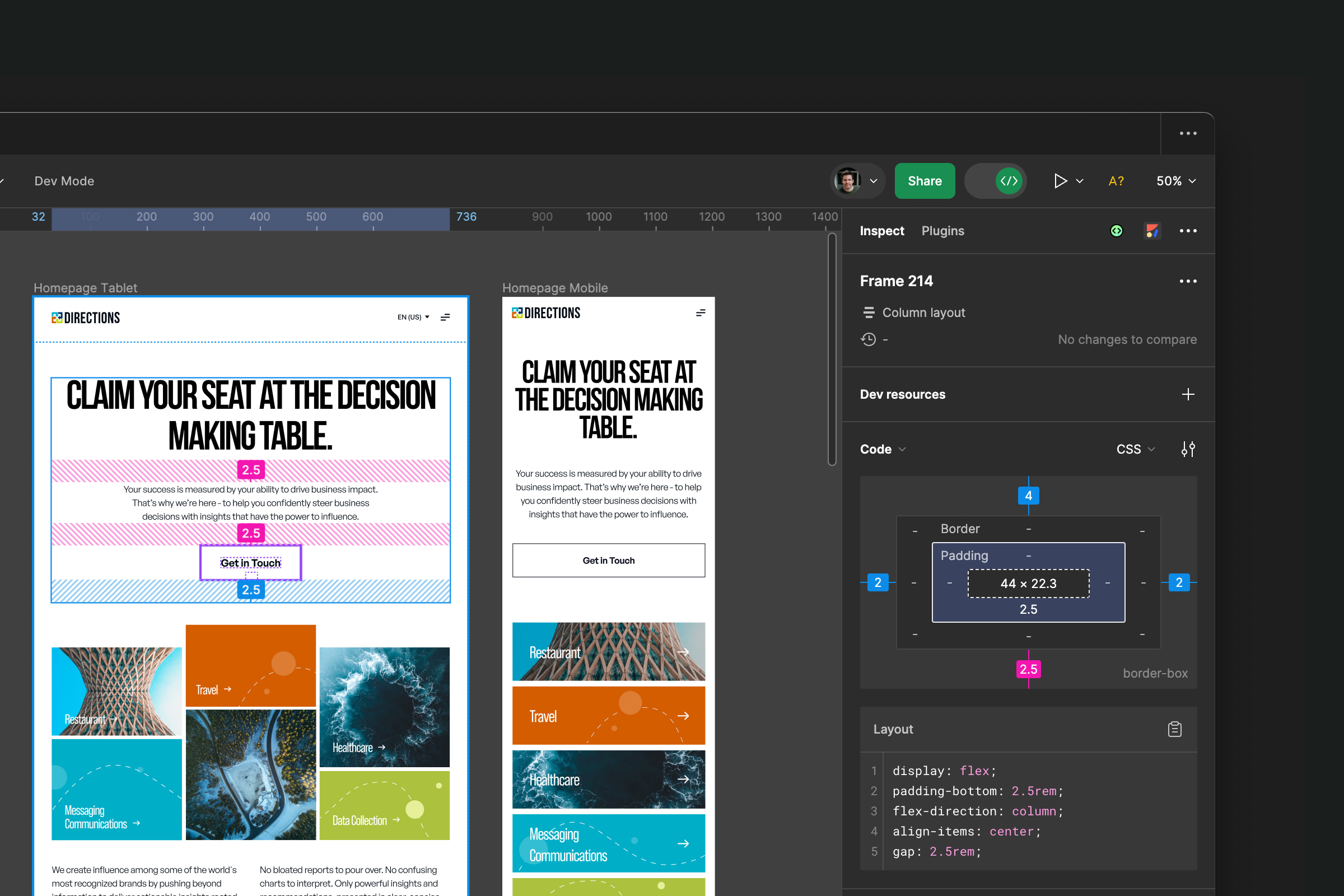

Enterprise tools that actually work

Let's be real - not everyone wants to mess with Python scripts. That's where enterprise tools come in. seoClarity's ClarityAutomate provides end-to-end automated internal linking that works at massive scale. We're talking about platforms that can handle millions of pages without breaking a sweat.

What makes these tools "enterprise-ready"? First, they integrate with any CMS through simple implementation. No need to rebuild your entire tech stack. Second, they provide bulk deployment capabilities. LinkActions, for example, can implement thousands of links simultaneously while maintaining quality control.

Here's what the best enterprise tools offer:

- Automated discovery and implementation

- CMS-agnostic deployment

- Bulk approval workflows

- Performance tracking

- ROI reporting

- A/B testing capabilities

But here's the thing most vendors won't tell you - implementation is everything. I've seen companies spend $50k on tools and get mediocre results because they didn't configure them properly. The key is understanding your content hierarchy and setting up rules that match your site's structure.

For example, a major healthcare provider I worked with used LinkActions to:

- Set up category-based linking rules

- Prioritize links to money pages

- Maintain specialty-specific silos

- Automate seasonal campaign links

The platform handled 2.5 million pages and added over 100,000 internal links in the first month. But more importantly, it maintained their strict content silos for YMYL compliance.

These tools also excel at maintaining link equity distribution. They can automatically identify pages hoarding PageRank and redistribute links to boost underperforming content. It's like having an SEO strategist working 24/7 on your internal link optimization. Need help choosing the right content management system that works with these tools? That's crucial for seamless integration.
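If you want a feel for what "link equity distribution" means in numbers, you can run a simplified PageRank over your own internal link graph. To be clear, this is a textbook power-iteration sketch, not how any vendor's platform or Google itself scores pages; the damping factor of 0.85 and the iteration count are conventional defaults I'm assuming.

```python
def internal_pagerank(edges, damping=0.85, iterations=50):
    """Approximate PageRank for pages in an internal link graph."""
    pages = sorted({page for edge in edges for page in edge})
    out = {page: [] for page in pages}
    for src, dst in set(edges):
        if src != dst:
            out[src].append(dst)
    rank = {page: 1 / len(pages) for page in pages}
    for _ in range(iterations):
        # Rank held by pages with no outlinks is spread evenly across the site.
        dangling = sum(rank[p] for p in pages if not out[p])
        new = {p: (1 - damping + damping * dangling) / len(pages) for p in pages}
        for src in pages:
            for dst in out[src]:
                new[dst] += damping * rank[src] / len(out[src])
        rank = new
    return rank

edges = [("/", "/a"), ("/", "/b"), ("/a", "/money"), ("/b", "/money"), ("/money", "/")]
rank = internal_pagerank(edges)
```

Sort the result by score and compare it with the pages you actually want to rank; a big mismatch between the two lists is your redistribution plan.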

Silk-Road links - building resilient link networks

Here's where we get into advanced territory. The concept of "Silk-Road Links" is about creating multiple pathways between related content, just like the ancient Silk Road had various routes between trading posts. This approach builds resilient link networks that maintain connectivity even when individual pages change.

Think about it - traditional internal linking creates single pathways. Page A links to Page B, Page B links to Page C. But what happens when Page B gets deleted or moved? You've got broken connections and potential new orphan pages. Silk-Road linking creates redundant pathways so content remains connected even when individual nodes change.
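You can test how resilient your current structure is by simulating the removal of each page and seeing what falls off the map. This sketch assumes a list of (source, target) internal links and a known homepage URL; `single_points_of_failure` is a name I made up, and the brute-force approach is fine for thousands of pages but not tuned for millions.

```python
from collections import deque

def reachable(start, edges, removed=None):
    """Pages reachable from start by following links, skipping one removed page."""
    graph = {}
    for src, dst in edges:
        if removed not in (src, dst):
            graph.setdefault(src, set()).add(dst)
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def single_points_of_failure(home, edges):
    """Map each page to the pages that become orphaned if it disappears."""
    baseline = reachable(home, edges)
    fragile = {}
    for page in sorted(baseline - {home}):
        lost = baseline - reachable(home, edges, removed=page) - {page}
        if lost:
            fragile[page] = sorted(lost)
    return fragile

chain = [("/", "/a"), ("/a", "/b"), ("/b", "/c")]
mesh = chain + [("/", "/b"), ("/a", "/c")]
```

Running it on `chain` flags `/a` and `/b` as pages whose removal strands everything behind them, while `mesh` comes back empty because every page has a second route in.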

Real-world example: Real estate websites face this challenge constantly. Properties come and go, but you need consistent internal linking. The solution? Dynamic linking modules that automatically connect:

- Similar properties in the same area

- Properties with comparable features

- Recently viewed properties

- Price-range alternatives

This creates a web of connections that adapts as inventory changes. No more orphan property pages, no more manual linking updates. The system maintains itself.
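Here's a stripped-down sketch of what such a dynamic module might look like. The `Listing` fields, the scoring weights, and the 15% price band are all assumptions for illustration; the important part is that related links are computed from the live inventory on every call, so sold listings simply stop appearing.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Listing:
    url: str
    area: str
    price: int
    features: frozenset = frozenset()

def related_listings(target, listings, price_band=0.15, limit=4):
    """Pick links for a listing page from whatever inventory is currently live."""
    scored = []
    for other in listings:
        if other.url == target.url:
            continue
        score = 0
        if other.area == target.area:
            score += 2  # same area counts most (hypothetical weighting)
        if abs(other.price - target.price) <= price_band * target.price:
            score += 1  # within the price band
        score += len(other.features & target.features)  # one point per shared feature
        if score:
            scored.append((score, other.url))
    ranked = sorted(scored, key=lambda item: (-item[0], item[1]))
    return [url for _, url in ranked[:limit]]

a = Listing("/homes/1", "riverside", 500000, frozenset({"pool", "garage"}))
b = Listing("/homes/2", "riverside", 520000, frozenset({"garage"}))
c = Listing("/homes/3", "hilltop", 480000, frozenset({"pool"}))
d = Listing("/homes/4", "hilltop", 900000)
```

Call `related_listings(a, [a, b, c, d])` today and again after `b` sells, and the second call just returns the next-best matches without anyone touching a template.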

I implemented this for a real estate platform with 50,000+ property listings. We created:

- Location-based linking clusters

- Feature-based connections (pools, garages, etc.)

- Price tier relationships

- School district groupings

- Neighborhood guide integration

The result? Even when properties sold and listings were removed, the remaining properties maintained strong internal link profiles. Average pages per session increased by 60%, and bounce rate dropped by 25%.

The key is thinking beyond direct relationships. In Silk-Road linking, you're creating a mesh network where every piece of content has multiple connection points. This isn't just good for SEO - it mirrors how users actually browse, jumping between related content naturally.

Advanced semantic rules for 2025

As we head into 2025, the rules for internal linking have evolved way beyond "link to relevant content." Modern semantic rules prioritize entity relationships and search intent alignment over keyword density. If you're not adapting, you're falling behind.

Here's what's changed:

- Thematic hierarchies matter more than ever - Your pillar pages need clear supporting content

- Entity connections trump keyword matches - Link based on concepts, not just words

- User intent drives link placement - Information seekers get different links than buyers

- Context window analysis - Google looks at surrounding content, not just anchor text

The implementation requires sophisticated planning. You can't just throw links everywhere and hope for the best. Excessive or irrelevant internal links dilute the value of the ones that matter. Quality beats quantity every single time.

What does this mean practically? Let me break it down:

- Build topic clusters properly - Main topic pages link to all subtopics, and subtopics link to each other when relevant

- Use descriptive anchors - "Learn about advanced keyword research techniques" not "click here"

- Maintain link velocity - Don't add 1,000 links overnight; gradual implementation looks natural

- Regular audits are mandatory - Links decay, content changes, algorithms evolve
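A basic audit pass for two of the most common problems, links to pages that no longer exist and one anchor repeated too often for the same target, can be sketched like this. The input format (source, target, anchor) and the 50% repetition cutoff are assumptions on my part.

```python
from collections import Counter

def audit_links(links, live_urls, max_anchor_share=0.5):
    """Flag links to missing pages and targets dominated by a single anchor.

    links: (source_url, target_url, anchor_text) tuples.
    live_urls: URLs that currently exist on the site.
    """
    live = set(live_urls)
    broken = sorted({(src, dst) for src, dst, _ in links if dst not in live})
    by_target = {}
    for _, dst, anchor in links:
        by_target.setdefault(dst, []).append(anchor.strip().lower())
    overused = {}
    for dst, anchors in by_target.items():
        anchor, count = Counter(anchors).most_common(1)[0]
        # Only judge targets with enough links for the share to mean something.
        if len(anchors) >= 3 and count / len(anchors) > max_anchor_share:
            overused[dst] = anchor
    return {"broken": broken, "overused_anchors": overused}

links = [
    ("/a", "/gone", "old guide"),
    ("/a", "/tools", "seo tools"),
    ("/b", "/tools", "seo tools"),
    ("/c", "/tools", "SEO Tools"),
    ("/d", "/tools", "tools for tracking rankings"),
]
result = audit_links(links, ["/a", "/b", "/c", "/d", "/tools"])
```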

I recently audited a site that had implemented internal linking in 2019 and never updated it. They were using exact-match anchors, linking to deleted pages, and had no topical organization. Their organic traffic had dropped 40% year-over-year. After restructuring with semantic rules, they recovered within 4 months.

The future is about building intelligent, self-maintaining link architectures that adapt to content changes and algorithm updates. If you're still thinking in terms of individual links rather than comprehensive linking strategy, it's time to level up. Magnet's SEO services include advanced internal linking audits that identify exactly where you're falling short.

Frequently Asked Questions

What exactly is an orphan page? An orphan page is any page on your website that has no internal links pointing to it. Users can't navigate to these pages through your site structure, and search engines struggle to find and rank them effectively.

How many orphan pages are too many? Even one orphan page is technically too many if it's valuable content. However, most sites can tolerate 1-2% orphan pages. Anything above 5% indicates serious site architecture problems that need immediate attention.
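As a quick sanity check, those thresholds translate into a few lines of arithmetic. The "tolerable" and "serious" cutoffs follow the numbers above; the in-between "watch" label for 2-5% is my own addition, since that range isn't covered.

```python
def orphan_health(orphan_count, total_pages):
    """Return the orphan share (percent) and a rough status label."""
    if total_pages <= 0:
        raise ValueError("total_pages must be positive")
    share = 100 * orphan_count / total_pages
    if share > 5:
        status = "serious"
    elif share > 2:
        status = "watch"  # hypothetical middle band
    else:
        status = "tolerable"
    return round(share, 1), status
```

For example, 150 orphans on a 10,000-page site works out to 1.5%, which sits in the tolerable range, while 700 orphans on the same site is 7% and needs attention.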

Can orphan pages still rank in Google? Technically yes, if they have external backlinks or are in your sitemap. However, they'll rank much worse than properly linked pages because they receive no internal PageRank and appear disconnected from your site structure.

What's the fastest way to fix orphan pages? For quick fixes, add links from your homepage or main category pages. For long-term solutions, implement automated internal linking tools that continuously monitor and connect isolated content.

How much do AI-powered internal linking tools cost? Entry-level tools like Link Whisper start around $77/year. Enterprise solutions like seoClarity or LinkActions can run $1000-5000/month depending on site size and features.

Should I use exact match anchors for internal links? No. Google prefers natural, descriptive anchor text that provides context. Use variations and descriptive phrases rather than repeatedly using the same keyword-rich anchors.

How often should I audit my internal links? Monthly for large, dynamic sites. Quarterly for stable sites with less frequent updates. Any major content changes or site restructures require immediate auditing.

What's the difference between orphan pages and dead-end pages? Orphan pages have no internal links pointing TO them. Dead-end pages have no internal links pointing FROM them. Both hurt user experience and SEO, but orphan pages are generally worse for rankings.
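If you have your internal links as (source, target) pairs, you can pull out both groups at once. This is a minimal sketch with made-up URLs; note that a page can show up in both lists.

```python
def classify_pages(pages, edges):
    """Split pages into orphans (no links TO them) and dead ends (no links FROM them)."""
    has_inbound = {dst for src, dst in edges if src != dst}
    has_outbound = {src for src, dst in edges if src != dst}
    pages = set(pages)
    return {
        "orphans": sorted(pages - has_inbound),
        "dead_ends": sorted(pages - has_outbound),
    }

pages = ["/", "/blog", "/pdf-download", "/old-landing"]
edges = [("/", "/blog"), ("/blog", "/"), ("/blog", "/pdf-download"), ("/old-landing", "/")]
result = classify_pages(pages, edges)
```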
